Like most people, I mostly interact with Python using the default REPL or with IPython. Yet, I often reach for one of the command-line tools that ship with the standard library. Each of these tools lives in the “main” block of a standard-library module, so you run it with python -m followed by the module name. Here are 7 I use on a semi-regular basis.
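There is nothing magic about these “mains”: python -m name runs the module's code with __name__ set to "__main__", so whatever sits under its if __name__ == "__main__": guard executes. Here is a minimal sketch of the pattern using a hypothetical greet module (not part of the standard library), written to a temporary directory and launched through the same python -m mechanism.

```python
import os
import pathlib
import subprocess
import sys
import tempfile

# Source of a hypothetical module, greet.py, built like zipfile or calendar:
# importable as a library, and runnable as a tool thanks to its main block.
GREET_SOURCE = '''\
import sys

def main(argv=None):
    args = sys.argv[1:] if argv is None else argv
    name = args[0] if args else "world"
    print(f"hello {name}")

if __name__ == "__main__":  # true when run as a script or with python -m
    main()
'''


def run_as_module(source: str, module_name: str, *args: str) -> str:
    """Save `source` as <module_name>.py in a temp dir, run it with -m, return stdout.

    run_as_module is a hypothetical helper for this sketch.
    """
    with tempfile.TemporaryDirectory() as tmp:
        pathlib.Path(tmp, f"{module_name}.py").write_text(source)
        # With -m, the current directory goes on sys.path unless PYTHONSAFEPATH
        # is set, so drop that variable and run from tmp to find the module.
        env = {k: v for k, v in os.environ.items() if k != "PYTHONSAFEPATH"}
        result = subprocess.run(
            [sys.executable, "-m", module_name, *args],
            cwd=tmp,
            env=env,
            capture_output=True,
            text=True,
            check=True,
        )
    return result.stdout


print(run_as_module(GREET_SOURCE, "greet", "reader"), end="")  # hello reader
```

The same module could also be imported and its main() called directly, which is why this pattern is so common in the standard library.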

1. & 2. Decompress and Archive Files

It’s not uncommon for me to be using a remote server, or someone else’s machine, where I don’t readily have access to tools to compress and decompress files from the command line. For .zip files, I reach for the zipfile module. I can unzip a file into the current directory with:

$ python -m zipfile -e myarchive.zip .

Or create one with:

$ python -m zipfile -c myarchive.zip file1.txt file2.jpg file3.bin
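When I need the same thing inside a script, zipfile.ZipFile does the job. Here is a minimal sketch; make_zip and extract_zip are hypothetical helper names, and unlike the command-line tool, this version does not recurse into directories.

```python
import os
import zipfile

# make_zip / extract_zip are hypothetical helpers, not part of zipfile itself.


def make_zip(archive, files):
    """Roughly `python -m zipfile -c archive file...` for plain files.

    Each file is stored under its base name, deflate-compressed.
    """
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in files:
            zf.write(path, arcname=os.path.basename(path))


def extract_zip(archive, dest="."):
    """Roughly `python -m zipfile -e archive dest`; returns the member names."""
    with zipfile.ZipFile(archive) as zf:
        # extractall strips absolute paths and ".." components from member names
        zf.extractall(dest)
        return zf.namelist()


# Usage mirroring the commands above (hypothetical files in the current directory):
#   make_zip("myarchive.zip", ["file1.txt", "file2.jpg", "file3.bin"])
#   extract_zip("myarchive.zip", ".")
```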

The tarfile module offers the same -e (extract) and -c (create) options for tar archives, including compressed ones such as .tar.gz.
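A comparable sketch for tarfile, again with hypothetical helper names. It writes a gzip-compressed archive (the command-line tool picks gzip when the archive name ends in .gz or .tgz) and extracts with the "data" filter where it exists. Extraction filters arrived in Python 3.12 and were backported to some earlier patch releases, hence the hasattr check.

```python
import os
import tarfile

# make_tar_gz / extract_tar are hypothetical helpers, not part of tarfile itself.


def make_tar_gz(archive, files):
    """Roughly `python -m tarfile -c archive.tar.gz file...` for plain files."""
    with tarfile.open(archive, "w:gz") as tf:
        for path in files:
            tf.add(path, arcname=os.path.basename(path))  # store under base name


def extract_tar(archive, dest="."):
    """Roughly `python -m tarfile -e archive dest`; returns the member names."""
    with tarfile.open(archive, "r:*") as tf:  # "r:*" detects the compression
        if hasattr(tarfile, "data_filter"):
            # The "data" filter refuses members that would land outside dest,
            # links pointing outside it, and device files.
            tf.extractall(dest, filter="data")
        else:
            tf.extractall(dest)
        return tf.getnames()
```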

3. Serve Files Locally

When doing web development, I sometimes need to spin up a server to make sure things work properly. In a pinch, I reach for Python’s built-in server, http.server. By default, it serves the contents of the current directory on port 8000, so you can browse them at http://localhost:8000.

$ python -m http.server

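Use this server for local development only. The docs warn that http.server is not recommended for production because it only implements basic security checks. By default it also listens on all network interfaces, not just localhost; add --bind 127.0.0.1 to keep it private to your machine.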
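The same server can be started from Python with the http.server classes, which is handy in tests. A minimal sketch, assuming you want it bound to 127.0.0.1 and running in a background thread; start_server is a hypothetical helper name, and port=0 asks the OS for any free port instead of the default 8000.

```python
import functools
import http.server
import threading


def start_server(directory, host="127.0.0.1", port=0):
    """Serve `directory` over HTTP from a background thread.

    Roughly `python -m http.server --bind 127.0.0.1 --directory <directory>`.
    start_server is a hypothetical helper. Read the chosen port from
    server.server_address; call server.shutdown() and server.server_close()
    when done.
    """
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    server = http.server.ThreadingHTTPServer((host, port), handler)
    # daemon=True so a forgotten server doesn't keep the interpreter alive
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For example, server = start_server("site") followed by print(server.server_address) tells you which address to open in a browser.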

4. Inspect JSON Data

When quickly exploring web APIs I haven’t worked with, I’ll often reach for the curl command-line tool instead of going straight to Python. In that case, I’ll pipe the output through json.tool to make the JSON more readable. It works with files too, if you have the data locally.

$ cat data.json
[{"name": "hydrogen", "atomic_number": 1, "boiling_point": 20.271},
 {"name": "titanium", "atomic_number": 22, "boiling_point": 3560}]

$ cat data.json | python3 -m json.tool
[
    {
        "name": "hydrogen",
        "atomic_number": 1,
        "boiling_point": 20.271
    },
    {
        "name": "titanium",
        "atomic_number": 22,
        "boiling_point": 3560
    }
]
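Under the hood, json.tool essentially parses its input with the json module and dumps it back out with indent=4. Here is a sketch of the same pretty-printing from Python, using the data above; pretty is a hypothetical helper name, and the last lines show a simple filter done in plain Python.

```python
import json

raw = """[{"name": "hydrogen", "atomic_number": 1, "boiling_point": 20.271},
 {"name": "titanium", "atomic_number": 22, "boiling_point": 3560}]"""


def pretty(text: str) -> str:
    """Re-indent a JSON document with 4 spaces, json.tool's default (hypothetical helper)."""
    return json.dumps(json.loads(text), indent=4)


print(pretty(raw))

# A simple filter, the kind of job jq excels at, done in plain Python:
data = json.loads(raw)
hot = [el["name"] for el in data if el["boiling_point"] > 1000]
print(hot)  # ['titanium']
```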

When I need to do more than look at the JSON file, like filtering it or transforming it, I reach for the amazing jq JSON processor.

5. Look Up Documentation

I’m nearly always looking up documentation within IPython and Jupyter by appending a ? to a function name or by using the Shift-Tab-Tab shortcut, but sometimes I need a little more. That’s when I reach for the pydoc module. Given a module name, pydoc extracts the docstrings of all its public functions, classes, and methods, dunder methods included. The built-in help() function is built on top of it.

$ python3 -m pydoc os.path
Help on module posixpath in os:

NAME
    posixpath - Common operations on Posix pathnames.

MODULE REFERENCE
    https://docs.python.org/3.11/library/posixpath

    The following documentation is automatically generated from the Python
    source files. It may be incomplete, incorrect or include features that
    are considered implementation detail and may vary between Python
    implementations. When in doubt, consult the module reference at the
    location listed above.

DESCRIPTION
    Instead of importing this module directly, import os and refer to
    this module as os.path. The "os.path" name is an alias for this
    module on Posix systems; on other systems (e.g. Windows),
    os.path provides the same operations in a manner specific to that
    platform, and is an alias to another module (e.g. ntpath).

    Some of this can actually be useful on non-Posix systems too, e.g.
    for manipulation of the pathname component of URLs.

FUNCTIONS
    abspath(path)
        Return an absolute path.

[… continues for a while …]
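From Python, pydoc.render_doc returns the same page as a string, which is handy if you want to save or grep it. A small sketch; doc_text is a hypothetical helper name, and pydoc.plaintext is the renderer that skips the backspace-based bold sequences pydoc's default text renderer emits for terminals.

```python
import pydoc


def doc_text(name: str) -> str:
    """Return roughly what `python -m pydoc <name>` prints, as plain text.

    doc_text is a hypothetical helper for this sketch.
    """
    return pydoc.render_doc(name, title="Help on %s:", renderer=pydoc.plaintext)


text = doc_text("os.path")
print("\n".join(text.splitlines()[:5]))  # the header and NAME section
```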

You can also search for a keyword in the one-line summaries of every module in your environment. It’s pretty handy (but very slow!):

$ python -m pydoc -k array
ctypes.test.test_array_in_pointer
ctypes.test.test_arrays
test.test_array - Test the arraymodule.
test.test_bytes - Unit tests for the bytes and bytearray types.
array
numpy.core - Contains the core of NumPy: ndarray, ufuncs, dtypes, etc.
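The search matches the keyword against “module name - synopsis” lines, where the synopsis is the first line of each module's docstring. Here is a rough, hypothetical reimplementation of that idea (search_synopses is not a pydoc function); unlike pydoc -k, it only looks at top-level, pure-Python modules and never imports anything, which also makes it much faster.

```python
import os
import pkgutil
import pydoc


def search_synopses(keyword):
    """Yield (module_name, synopsis) for top-level modules whose
    "name - synopsis" line contains `keyword`, ignoring case.

    search_synopses is a hypothetical helper, a simplified take on pydoc -k.
    """
    keyword = keyword.lower()
    for info in pkgutil.iter_modules():  # walks sys.path without importing
        folder = getattr(info.module_finder, "path", None)
        if folder is None:
            continue  # finder without a directory path: skip it
        if info.ispkg:
            filename = os.path.join(folder, info.name, "__init__.py")
        else:
            filename = os.path.join(folder, info.name + ".py")
        if not os.path.isfile(filename):
            continue  # extension modules, bytecode-only modules, ...
        try:
            summary = pydoc.synopsis(filename) or ""
        except Exception:
            continue  # unreadable or unparsable source: skip it
        if keyword in f"{info.name} - {summary}".lower():
            yield info.name, summary
```

For example, for name, summary in search_synopses("zip"): print(name, "-", summary) lists modules such as zipfile and gzip.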

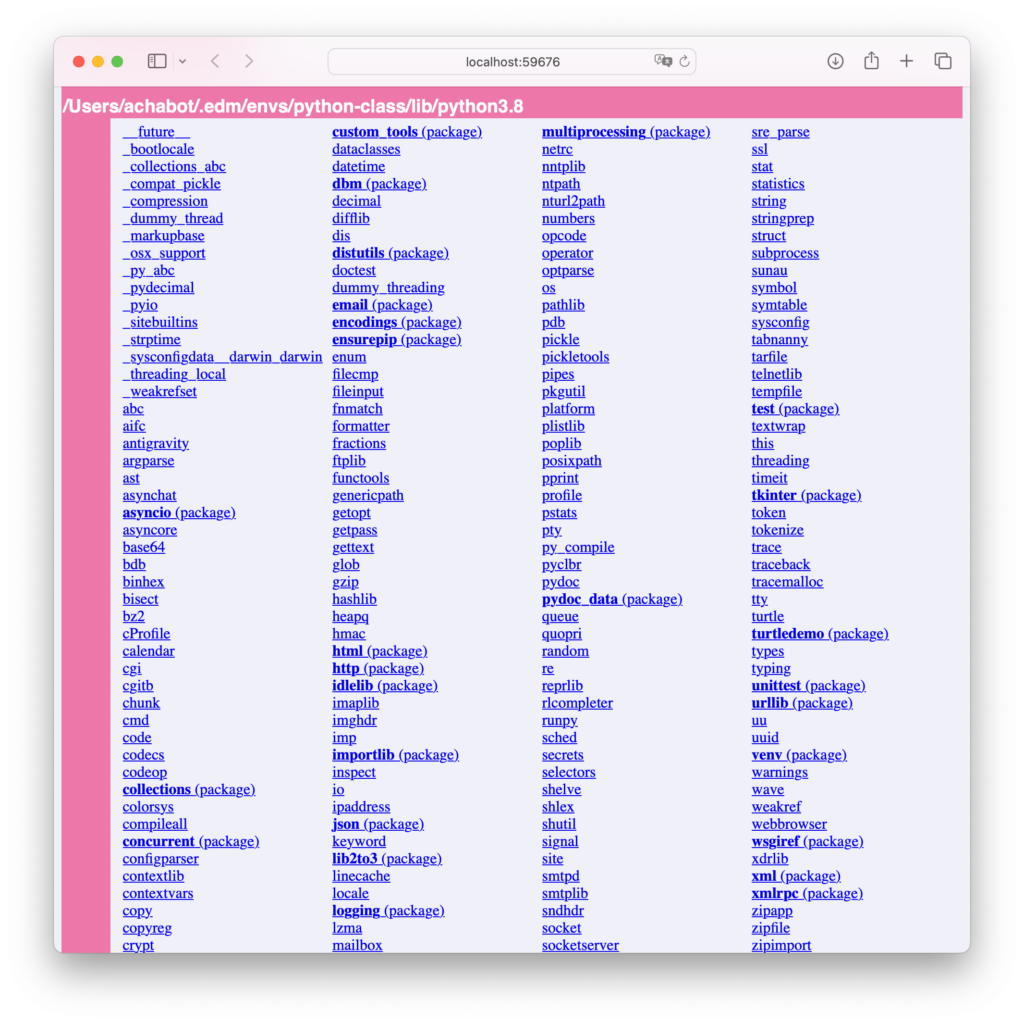

You can even start a web server to navigate the documentation of all the packages in your environment with the -b option:

$ python -m pydoc -b

6. Check the Date

I’ll admit, I’m a nerd. I may or may not have considered using a terminal-based calendar application as my main calendar…it didn’t stick, but I still sometimes reach for the calendar module when I need to see some dates in context, especially for dates “far” into the past or future. Turns out Y2K was a Saturday.

$ python -m calendar 2000 1
    January 2000
Mo Tu We Th Fr Sa Su
                1  2
 3  4  5  6  7  8  9
10 11 12 13 14 15 16
17 18 19 20 21 22 23
24 25 26 27 28 29 30
31
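The same grid is available as a string through calendar.month, and calendar.weekday answers the Y2K question directly. It numbers days from Monday as 0, so Saturday is calendar.SATURDAY, which equals 5.

```python
import calendar

# The same month grid `python -m calendar 2000 1` prints, as a string.
print(calendar.month(2000, 1))

# Which weekday was January 1, 2000? (Monday is 0.)
day = calendar.weekday(2000, 1, 1)
print(day == calendar.SATURDAY)  # True
```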

7. Visit the Tab Nanny

I have used the Tab Nanny only once or twice in my life, because most modern editors take care of this for you: they don’t let you mix tabs and spaces in your Python code.

# The -t option displays tab characters as ^I
$ cat -t that_is_no_good.py
def hello_world():
    who = "reader"
^Iprint(f"hello {who}")

$ python -m tabnanny that_is_no_good.py
that_is_no_good.py 3 '\tprint(f"hello {who}")\n'
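The idea behind the Tab Nanny is that indentation is ambiguous when its meaning depends on how wide a tab is. Here is a deliberately simplified, hypothetical checker in that spirit; it is not tabnanny's actual algorithm, which tokenizes the file and compares whole indentation stacks. It compares each code line with the previous one and flags it when “indented, level, or dedented” changes between 1-column and 8-column tabs. Because it doesn't tokenize, continuation lines and multi-line strings can confuse it.

```python
# Hypothetical helpers for this sketch; none of these are part of tabnanny.


def indent_width(line: str, tabsize: int) -> int:
    """Columns taken by the leading whitespace of `line` for a given tab size."""
    body = line.lstrip(" \t")
    prefix = line[: len(line) - len(body)]
    return len(prefix.expandtabs(tabsize))


def compare_indent(prev: str, line: str, tabsize: int) -> int:
    """-1, 0 or 1: is `line` dedented, level with, or indented relative to `prev`?"""
    a, b = indent_width(prev, tabsize), indent_width(line, tabsize)
    return (b > a) - (b < a)


def ambiguous_lines(source: str) -> list:
    """Return 1-based numbers of lines whose indentation relative to the
    previous code line means different things with 1- and 8-column tabs."""
    flagged = []
    prev = None
    for lineno, line in enumerate(source.splitlines(), start=1):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # blank lines and comments don't affect indentation
        if prev is not None and (
            compare_indent(prev, line, 1) != compare_indent(prev, line, 8)
        ):
            flagged.append(lineno)
        prev = line
    return flagged


bad = 'def hello_world():\n    who = "reader"\n\tprint(f"hello {who}")\n'
print(ambiguous_lines(bad))  # [3]
```

A file indented consistently with tabs alone, or with spaces alone, comes back clean; only the mix is flagged.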

But what I like most about the Tab Nanny is the first line of its module docstring: “The Tab Nanny despises ambiguous indentation. She knows no mercy.”

Author: Alexandre Chabot-Leclerc, Vice President, Digital Transformation Solutions, holds a Ph.D. in electrical engineering and an M.Sc. in acoustics engineering from the Technical University of Denmark and a B.Eng. in electrical engineering from the Université de Sherbrooke. He is passionate about transforming people and the work they do. He has taught the scientific Python stack and machine learning to hundreds of scientists, engineers, and analysts at the world’s largest corporations and national laboratories. After seven years in Denmark, Alexandre is totally sold on commuting by bicycle. If you have any free time you’d like to fill, ask him for a book, music, podcast, or restaurant recommendation.
